Introduction

Raya is a free, feature-rich and extensible CTF platform written in Rust. It's designed to be self-hosted and work with Docker or Kubernetes to spin up per-user challenge instances. You can also use Lua extensions to tweak and extend how the platform behaves.

Overview

Once you've set up the platform, you can start by creating an event for your contest or a lab for practice challenges. Users can sign up, join a team, and register for the event. After that, you can add challenges to the event or lab you made. When the event kicks off, users will see the challenges and can deploy their own unique instance for each one. For example, if you're hosting web challenges, every user gets a personal link to their own instance. This is handled through Docker or Kubernetes.

This setup lets you do cool stuff like:

- Generate a unique random flag for each user.

- Set up dynamic environment variables based on user or team info.

- Keep track of user activity for each instance.

Note: Raya is not released yet. I'm still implementing some features, and I plan to refactor the extensions subsystem.

Installation

As a container

You can run Raya as a container, but you must configure it properly so that players can deploy instances:

docker pull ghcr.io/mohammedgqudah/rayactf:latest

docker pull ghcr.io/mohammedgqudah/rayactf-fe:latest

Using cargo (WIP)

You can build it from source using cargo:

cargo install rayactf

Using prebuilt binaries on GitHub

Setup

Config

By default, Raya looks for the configuration file in /etc/rayactf/rayactf.toml. You can override this default location by specifying a custom path with the --config option:

rayactf --config ./rayactf.toml

Reference

# `config_version` indicates the version of Rayactf when the configuration was last written.
# This helps avoid issues when upgrading, ensuring that new changes don't unexpectedly break your setup.
# For example:
# 1. The config was written with version 0.1.0.
# 2. You upgrade Raya to version 0.2.0, but 0.2.0 changes the default value of a config you didn't modify.
# 3. Because your `config_version` is still 0.1.0, the old default value is kept, allowing you to upgrade safely and update the config later.
config_version = "0.1.0"

# Data directory for Raya. This includes extensions, container images, and uploads.
base_dir = "/var/lib/rayactf"

[server]
port = 3000
host = "0.0.0.0"

[extensions]
# Enable managing extensions using a web editor in the admin dashboard.
enable_over_http = true

[database]
# Adding this here is fine locally, but it's recommended to use an environment variable for secrets.
# Use `RAYA_DATABASE_URL`.
url = "postgres://<USER>:<PASSWORD>@localhost:5432"

[docker]
# If Raya itself is running inside a container, you should mount the socket from the host.
# `/var/run/docker.sock:/var/run/docker.sock`
socket = "/var/run/docker.sock"

Docker

Docker is the easiest way to run instances. To get started, make sure Docker is installed on the host machine.

When creating a Docker challenge, you can select a container image from the list of images available on your machine. You'll also need to write a challenge Lua script, where you're expected to set raya.docker_options and specify at least the container's port. When an instance is requested, a new container is launched with an ephemeral port assigned automatically.

Raya communicates with the Docker daemon over the Unix socket unix:///var/run/docker.sock. If you are running Raya inside a container, make sure the socket is mounted as a volume; the Compose file under Deploying with Docker shows an example.

Limitations

While Docker works well for most scenarios, you might want to consider using Kubernetes if:

- You need high availability and can't tolerate a single point of failure (The Docker implementation is limited to a single host).

- Your challenges are resource-intensive or you have too many challenges for a single host to handle.

- You want to assign a dynamic subdomain for each instance instead of exposing Docker's ephemeral ports.

Subdomains can be implemented with Docker if you use a reverse proxy on the host machine and configure it dynamically via your Lua script. However, Kubernetes makes this process much more straightforward.

Kubernetes

This integration is still under development and is not ready yet. I haven't written additional documentation because the design may change at any point.

Raya comes with a Kubernetes controller for the RayaChallenge CRD. The controller syncs challenge instances with pods, services, and ingresses. When a RayaChallenge is ready, users are notified over a WebSocket that the instance is ready.

CRD Installation

Challenges

Machine Challenges

Dynamic Challenges

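Dynamic points challenges are inspired by CTFd. A challenge's value starts at its initial value and decays toward its minimum as the solve count grows: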

\[ \text{new value} = \left\lceil \frac{\text{minimum} - \text{initial}}{\text{decay}^2} \cdot \text{solve\_count}^2 + \text{initial} \right\rceil \]
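To make the formula concrete, here is a minimal Python sketch that evaluates it. The function name `dynamic_value` is hypothetical, and the final clamp to `minimum` is an assumption borrowed from how CTFd behaves; it is not stated in this document and may not match Raya's implementation.

```python
import math
from fractions import Fraction


def dynamic_value(initial: int, minimum: int, decay: int, solve_count: int) -> int:
    """Evaluate the parabolic decay formula above with exact arithmetic."""
    # Fraction avoids float rounding right next to an integer before ceil().
    raw = Fraction(minimum - initial, decay ** 2) * solve_count ** 2 + initial
    value = math.ceil(raw)
    # Assumption (not stated in this document): never drop below `minimum`.
    return max(value, minimum)


for solves in (0, 10, 20, 30):
    print(solves, dynamic_value(initial=500, minimum=100, decay=20, solve_count=solves))
```

With initial = 500, minimum = 100, and decay = 20, the value is 500 at 0 solves, 400 at 10 solves, and reaches the minimum of 100 at 20 solves. Without the clamp, the raw formula would keep falling past the minimum once the solve count exceeds the decay.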

Concepts

Events

An event represents a structured and timed competition, practice session, or training exercise hosted on the platform. It serves as a container for challenges that users participate in during a defined period. Each event has its own leaderboard. When registering for an event, users sign up as teams. You can also set a maximum team size when creating the event.

Labs

A lab is a space on the platform where users can practice and experiment with challenges at their own pace. Unlike events, labs aren’t timed or competitive.

You can set up a lab to focus on specific topics, techniques, or areas of interest.

Extensions

Lua plugins allow you to customize Raya and make your challenges unique. Plugins can access the raya API and perform tasks such as:

- Assigning a unique flag per instance

- Controlling environment variables

- Mounting volumes/devices to an instance

- Sending announcements

- Detecting cheating and disqualifying teams

- Extending monitoring by adding custom metrics (Raya supports Prometheus out of the box)

- Scaling up the infrastructure when the number of instances increases

These are just some common tasks you can perform. However, you can program your plugin to do much more. Take a look at recipes for some inspiration.

Recipes

A list of commonly requested extensions.

First blood announcer over Discord

-- /etc/rayactf/extensions/events/PostChallengeSubmission.lua
local lunajson = require("lunajson")
local http = require("socket.http")
local ltn12 = require("ltn12")

local webhook_url = "https://discord.com/api/webhooks/<SECRET>/<SECRET>"

-- POST a JSON body to the given URL and raise an error if the request fails.
local function post_request(url, body)
    local result, status_code, headers = http.request{
        url = url,
        method = "POST",
        source = ltn12.source.string(body),
        headers = {
            ["Content-Type"] = "application/json",
            ["Content-Length"] = tostring(#body),
        },
    }
    if not result then
        -- On failure the second return value holds the error message.
        error("HTTP request failed: " .. tostring(status_code))
    end
end

local function send_discord_message(message)
    post_request(webhook_url, lunajson.encode({
        content = message
    }))
end

-- First blood announcement
if raya.result.Correct and raya.challenge.solves == 0 then
    local name = ""
    if raya.team then
        name = raya.team.name
    else
        name = raya.user.name
    end
    send_discord_message(string.format(":drop_of_blood: First blood for **%s** goes to **%s**", raya.challenge.name, name))
else
    -- Reached for incorrect submissions and for correct solves after the first.
    print("no first blood to announce")
end

Unique flag per user (environment variable) | docker

-- /etc/rayactf/extensions/events/PreInstanceCreation.lua
-- Build a random string of `length` hexadecimal characters.
local function generate_hex(length)
    local hex = ""
    for i = 1, length do
        hex = hex .. string.format("%x", math.random(0, 15))
    end
    return hex
end

if raya.current_flag then
    raya.log("Using the fixed flag value")
else
    local flag = string.format("Raya{%s}", generate_hex(40))
    raya.flag = flag
end
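The recipe above draws each flag character from math.random. For comparison, if you ever pre-generate flags outside a Lua plugin (for example in a deployment script), the following Python sketch produces the same Raya{<hex>} shape from the operating system's cryptographic random source. The helper name `make_flag` and its parameters are hypothetical and not part of Raya.

```python
import re
import secrets


def make_flag(prefix: str = "Raya", hex_chars: int = 40) -> str:
    """Return a flag such as Raya{<40 hex characters>}."""
    if hex_chars <= 0 or hex_chars % 2:
        raise ValueError("hex_chars must be a positive even number")
    # token_hex(n) returns 2 * n hexadecimal characters from the OS CSPRNG.
    return f"{prefix}{{{secrets.token_hex(hex_chars // 2)}}}"


flag = make_flag()
# Sanity-check the shape before handing the flag to an instance.
assert re.fullmatch(r"Raya\{[0-9a-f]{40}\}", flag)
print(flag)
```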

Unique flag.txt per user | docker

Some challenges have their flag stored in a text file instead of environment variables.

-- /etc/rayactf/extensions/events/PreInstanceCreation.lua
-- Build a random string of `length` hexadecimal characters.
local function generate_hex(length)
    local hex = ""
    for i = 1, length do
        hex = hex .. string.format("%x", math.random(0, 15))
    end
    return hex
end

-- If Raya itself is deployed as a container, make sure to mount /tmp to the host.
local path = string.format("/tmp/flag-%s.txt", generate_hex(20))
-- assert surfaces the error message if the file cannot be opened.
local file = assert(io.open(path, "w"))
local flag = string.format("Raya{%s}", generate_hex(40))
file:write(flag)
file:close()

raya.docker_options = {
    port = "1234",
    volumes = { string.format("%s:/flag.txt", path) }
}
raya.flag = flag

raya Lua API Reference

This document provides an overview of the raya Lua API, which allows users to interact with and extend the functionality of the raya platform. Certain methods are tied to specific events.

Note: I might refactor the extension subsystem so that it works like Neovim's, where the platform loads an init.lua and you can then require more scripts and register events using raya.on.

General Functions

These functions are available in all event contexts.

log(message: string)

Logs a message to the raya platform's console or log file.

- Parameters:

message: A string containing the message to log.

- Example:

raya.log("Application started.")

Pre Instance Creation

docker_options: table

Sets the Docker container options (used in Docker challenges).

- port (string): The container port you wish to publish.

- volumes (string[]): A list of volume mappings.

- devices (string[]): A list of devices to mount in the container.

- host_ip (string): The host IP address. The default is 0.0.0.0.

- environment (string[]): A list of environment variables.

- Example:

raya.docker_options = {
    port = "3000",
    volumes = {"/tmp/flag.txt:/app/flag.txt"},
    environment = {"TEAM_NAME=<TEAM_NAME>", "MY_FLAG=FLAG{1234}"}
}

flag: string

Sets the instance flag.

- Example:

raya.flag = string.format("FLAG{%s}", random_hex(50))

description: string

Sometimes you may wish to add a dynamic description for an instance. For example, the instance may be protected using a random password and you want to show the connection details to the user.

- description (string): Instance description (Markdown is supported).

- Example:

raya.description = string.format([[

#### Connection information

Your password is: `%s`

]], random_password)

instance: table

Sets the instance options.

- show_port (bool): Whether to show the allocated port next to the host.

- host (string): The host to show to the user. The default is 127.0.0.1.

- Example:

raya.instance = {
    host = "my_custom_domain.com",
}

Constants

- raya.user (table)

  - id (string)

  - name (string)

  - is_admin (bool)

  - team (table)

Guides

Deploying with Docker

version: "3"
services:
  rayactf:
    image: ghcr.io/mohammedgqudah/rayactf:latest
    environment:
      RUST_LOG: 'raya_ctf=TRACE'
    volumes:
      - ./rayactf.toml:/etc/rayactf/rayactf.toml # config
      - ./extensions:/etc/rayactf/extensions # extensions directory
      - /var/run/docker.sock:/var/run/docker.sock # so that you can spawn docker instances
      - /tmp/rayactf:/tmp/rayactf # tmp directory
    ports:
      - "3000:3000"
  rayactf_fe:
    image: ghcr.io/mohammedgqudah/rayactf-fe:latest
    environment:
      API_URL: 'http://95.179.139.160:3000'
      ORIGIN: http://95.179.139.160
      PORT: 80
    ports:
      - 80:80
      - 443:80
  db:
    image: postgres:latest
    volumes:
      - ./pg-data:/var/lib/postgresql/data
    environment:
      POSTGRES_PASSWORD: '123456789'
  etcd:
    image: gcr.io/etcd-development/etcd:v3.5.17
    container_name: etcd
    command:
      - /usr/local/bin/etcd
      - --data-dir=/etcd-data
      - --name
      - node1
      - --initial-advertise-peer-urls
      - http://192.168.1.21:2380
      - --listen-peer-urls
      - http://0.0.0.0:2380
      - --advertise-client-urls
      - http://192.168.1.21:2379
      - --listen-client-urls
      - http://0.0.0.0:2379
      - --initial-cluster
      - node1=http://192.168.1.21:2380

Hosting PWN Challenges

Players can interact with your vulnerable binary over TCP (e.g., using netcat) if you run your binary behind socat.

Prepare your challenge's image

FROM ubuntu:latest
RUN apt-get update && apt-get install -y socat
COPY flag.txt /
COPY YOUR_BINARY /
RUN chmod +x /YOUR_BINARY
# socat forks one copy of the binary per incoming TCP connection on port 12345.
CMD ["socat", "TCP-LISTEN:12345,reuseaddr,fork", "EXEC:/YOUR_BINARY"]

Your challenge's Lua plugin can configure the port 12345 and optionally mount a random flag.txt if you want a dynamic flag.
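Before publishing such an image, it can help to check that the service answers over plain TCP the way a player's netcat session would. The Python sketch below is one way to do that. `send_line` is a hypothetical helper, and the small echo server only stands in for the socat-wrapped binary so that the example runs on its own; point `send_line` at your instance's host and published port instead.

```python
import socket
import socketserver
import threading


def send_line(host: str, port: int, line: bytes, timeout: float = 5.0) -> bytes:
    """Connect like `nc host port`, send one line, and return the first reply line."""
    with socket.create_connection((host, port), timeout=timeout) as conn:
        with conn.makefile("rb") as reader:
            conn.sendall(line + b"\n")
            return reader.readline()


class EchoHandler(socketserver.StreamRequestHandler):
    """Stand-in for the socat-wrapped binary: echoes one line back."""

    def handle(self) -> None:
        self.wfile.write(b"echo: " + self.rfile.readline())


def demo() -> bytes:
    # Port 0 asks the OS for any free port, so the demo never collides.
    with socketserver.TCPServer(("127.0.0.1", 0), EchoHandler) as server:
        threading.Thread(target=server.serve_forever, daemon=True).start()
        host, port = server.server_address
        try:
            return send_line(host, port, b"hello")
        finally:
            server.shutdown()


print(demo())
```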

Extra: Using a different GLIBC version.

You can change the interpreter and GLIBC version using patchelf.

FROM ubuntu:latest
RUN apt-get update && apt-get install -y socat patchelf
COPY flag.txt /
COPY libc.so.6 /
COPY ld-linux-x86-64.so.2 /
COPY YOUR_BINARY /
RUN chmod +x libc.so.6
RUN chmod +x ld-linux-x86-64.so.2
RUN chmod +x /YOUR_BINARY
# Point the binary at the bundled loader and libc instead of the system ones.
RUN patchelf /YOUR_BINARY --set-rpath . --set-interpreter ld-linux-x86-64.so.2
CMD ["socat", "TCP-LISTEN:12345,reuseaddr,fork", "EXEC:/YOUR_BINARY"]

Write a challenge plugin (Docker)

After adding the challenge, you can add a PostInstanceCreation and a PreInstanceCreation script.

-- events/my-challenge-123/PreInstanceCreation.lua
-- Build a random string of `length` hexadecimal characters.
local function generate_hex(length)
    local hex = ""
    for i = 1, length do
        hex = hex .. string.format("%x", math.random(0, 15))
    end
    return hex
end

-- create a tmp file on the host containing the flag
local path = string.format("/tmp/flag-%s.txt", generate_hex(20))
-- assert surfaces the error message if the file cannot be opened.
local file = assert(io.open(path, "w"))
local flag = string.format("ASU{%s}", generate_hex(40))
file:write(flag)
file:close()

-- set the port and mount the random flag.txt
raya.docker_options = {
    port = "80",
    volumes = { string.format("%s:/flag.txt", path) }
}
raya.flag = flag

-- events/my-challenge-123/PostInstanceCreation.lua

-- Update the instance description with the connection details

raya.description = string.format([[

```

nc chall.rayactf.local %s

```

]], raya.container.published_port)

Hosting Machine Challenges

TODO: Docker macvlan networking

Machine challenges can spawn a new environment of machines for players, which can be done using QEMU. Players will need to connect to a VPN (e.g., WireGuard) to access the dynamically created machines.

The VPN will grant users FULL access to your Docker network or Kubernetes pods network. For Kubernetes, you should write a NetworkPolicy to restrict users' access to machine challenges. For Docker, ensure that Raya and your databases are running on a separate Docker network.

VPN Setup (Docker)

I personally use WireGuard with wg-easy.

The server is configured to route traffic to the Docker network.

Update 10.254.0.0/16 to match your Docker network.

WG_ALLOWED_IPS=10.8.0.0/24, 10.254.0.0/16

POST_UP

iptables -A FORWARD -i wg0 -j ACCEPT; iptables -A FORWARD -o wg0 -j ACCEPT; iptables -t nat -A POSTROUTING -o enp1s0 -j MASQUERADE; iptables -A FORWARD -i wg0 -d 10.254.0.0/16 -j ACCEPT; iptables -A FORWARD -i wg0 -s 10.254.0.0/16 -j ACCEPT

POST_DOWN

iptables -D FORWARD -i wg0 -j ACCEPT; iptables -D FORWARD -o wg0 -j ACCEPT; iptables -t nat -D POSTROUTING -o enp1s0 -j MASQUERADE; iptables -D FORWARD -i wg0 -d 10.254.0.0/16 -j ACCEPT; iptables -D FORWARD -i wg0 -s 10.254.0.0/16 -j ACCEPT
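After adjusting the ranges, a quick sanity check is to confirm that an instance's IP address actually falls inside one of the allowed networks. The Python sketch below does this with the standard ipaddress module. The helper names `parse_allowed_ips` and `is_routed` are hypothetical, and the sample addresses are only illustrations.

```python
import ipaddress


def parse_allowed_ips(value: str) -> list:
    """Split a comma-separated WG_ALLOWED_IPS string into network objects."""
    return [ipaddress.ip_network(part.strip()) for part in value.split(",") if part.strip()]


def is_routed(address: str, allowed_ips: str) -> bool:
    """Return True when `address` falls inside any of the allowed networks."""
    ip = ipaddress.ip_address(address)
    return any(ip in network for network in parse_allowed_ips(allowed_ips))


ALLOWED = "10.8.0.0/24, 10.254.0.0/16"  # value copied from the setup above
print(is_routed("10.254.3.7", ALLOWED))  # an address on the example Docker network
print(is_routed("172.17.0.2", ALLOWED))  # an address outside both ranges
```

The first call prints True and the second prints False. If an instance address reports False, the Docker network and WG_ALLOWED_IPS are out of sync.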

VPN setup (Kubernetes)

The setup is the same as the Docker setup above; just change the Docker network address to the Pods CIDR.

Be careful not to disrupt your CNI. For instance, if you're using Calico, it might be configured to use the first detected network interface to determine the node IP, and wg0 could be the first one detected. To avoid this, add a regular expression to skip wg.* interfaces.

Machine image

Currently, I deploy machine challenges as a Docker container that runs QEMU. The image contains a copy of the machine, and each time a container starts, virt-customize updates the machine image (for example, patching flag.txt so that it has a dynamic flag, adding a dynamic user, or adding a machine environment variable).

FROM debian:12-slim AS builder
RUN apt-get update && \
    apt-get install -y qemu-system-x86 libguestfs-tools pwgen && \
    rm -rf /var/lib/apt/lists/*

# The OVA file of the challenge
COPY ctf-image.ova /root/ctf-image.ova
RUN tar -xvf /root/ctf-image.ova -C /root/
RUN rm /root/ctf-image.ova
RUN qemu-img convert -f vmdk -O qcow2 /root/*.vmdk /root/ctf-image.qcow2
RUN rm /root/*.vmdk

FROM debian:12-slim AS runner
RUN apt-get update && \
    apt-get install -y qemu-system-x86 libguestfs-tools pwgen && \
    rm -rf /var/lib/apt/lists/*
COPY --from=builder /root/ctf-image.qcow2 /root/ctf-image.qcow2

# Environment variables
ENV VM_MEMORY=1G
ENV VM_CPU=1

# Start script to set up and run the VM
COPY start_vm.sh /root/start_vm.sh
RUN chmod +x /root/start_vm.sh

# Run the start script
CMD ["/root/start_vm.sh"]

#!/bin/bash
VM_IMAGE="/root/ctf-image.qcow2"

echo "Generated SSH password for ctf-player: $SSH_PASSWORD" > /root/ssh_password.txt
echo "Generated SSH password for ctf-player: $SSH_PASSWORD"

# Add a player user
virt-customize --format qcow2 -a "$VM_IMAGE" --password "ctf-player:password:$SSH_PASSWORD"

# Add flag.txt
echo "$FLAG" > flag.txt

# Add a dynamic flag.txt to the machine
virt-customize --format qcow2 -a "$VM_IMAGE" --upload flag.txt:/home/ctf-player/flag.txt

# change the Redis password
virt-customize --format qcow2 -a "$VM_IMAGE" --run-command "sed -i 's/^requirepass .*/requirepass $FLAG/' /etc/redis/redis.conf"

# Ports used by the machine
PORTS_TO_FORWARD=("22" "8000" "8080" "443")

# build hostfwd arguments
HOSTFWD_ARGS=""
for PORT in "${PORTS_TO_FORWARD[@]}"; do
    HOSTFWD_ARGS="$HOSTFWD_ARGS,hostfwd=tcp::${PORT}-:${PORT}"
done

# trim the leading comma
HOSTFWD_ARGS="${HOSTFWD_ARGS#,}"

qemu-system-x86_64 -hda "$VM_IMAGE" -m "$VM_MEMORY" \
    -enable-kvm -smp "$VM_CPU" -boot c -nographic -serial mon:stdio \
    -device e1000,netdev=net0 \
    -netdev user,id=net0,$HOSTFWD_ARGS

PreInstanceCreation.lua

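-- Build a random string of `length` hexadecimal characters.
local function generate_hex(length)
    local hex = ""
    for i = 1, length do
        hex = hex .. string.format("%x", math.random(0, 15))
    end
    return hex
end

local password = generate_hex(10)

-- Expose the TUN and KVM devices to the container and pass the SSH password to start_vm.sh.
raya.docker_options = {
    devices = {"/dev/net/tun", "/dev/kvm"},
    environment = {string.format("SSH_PASSWORD=%s", password)}
}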

PostInstanceCreation.lua

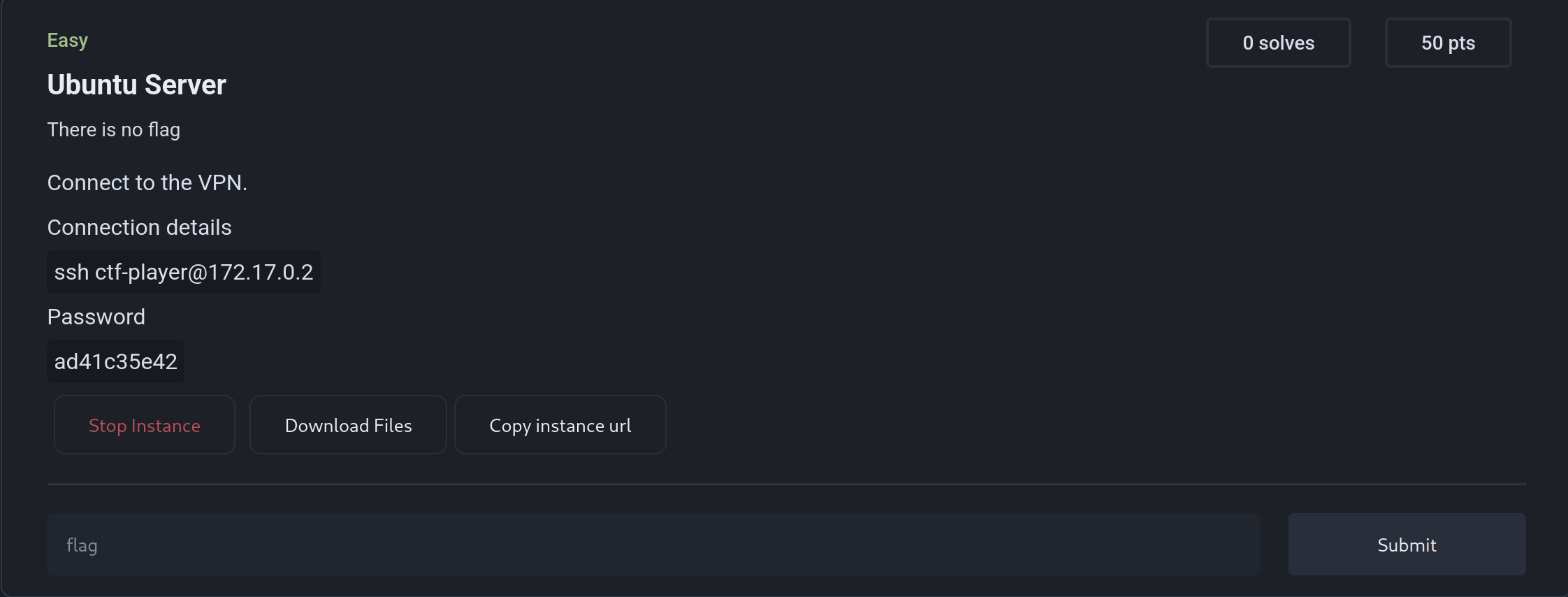

raya.description = string.format([[

Connect to the VPN.

#### Connection details

```sh

ssh ctf-player@%s

```

#### Password

```

%s

```

]], raya.container.container_ip_address, string.match(raya.docker_options.environment[1], "SSH_PASSWORD=(%w+)"))

raya.instance = {

show_port = false,

host = raya.container.container_ip_address

}

Screenshots